Import Listings

Usability testing to optimize the design of an AI cross-listing tool, resulting in 3x new daily listings.

Simplifying Cross-Listing for Mercari's High Volume Sellers

Mercari sought to streamline the cross-listing experience for high volume sellers—those who manage large inventories and use multiple platforms to maximize their sales. Prior to this project, sellers were burdened by manual processes that slowed down their ability to list items efficiently across different sites. During Mercari’s bi-annual hackathon, an engineer proposed an idea to simplify this by creating an import tool that could pull metadata from other sites and easily populate Mercari’s listing form. The tool was designed to empower these experienced sellers, but to truly succeed, it needed to be intuitive, easy to navigate, and deeply aligned with the needs of high volume sellers.

Company

Mercari US

Timeframe

Sep - Dec 2023

Role

UX Researcher

Overview

Mercari sought to empower experienced sellers—those managing large inventories and regularly cross-listing items across multiple resale platforms—to list items as easily and efficiently as possible. Many of these high volume sellers use multiple platforms, like eBay, to maximize exposure and increase their chances of making a sale. However, these sellers often find the cross-listing process cumbersome, requiring too much manual effort and discouraging them from fully leveraging cross-listing to its potential. Recognizing this, Mercari introduced an idea during its annual hackathon, Go Bold Day, where an engineer proposed using a large language model (LLM) tool to simplify the process by porting over high-quality listings from other sites like eBay. This proposal had great potential, but to succeed, the tool needed to be intuitive, seamless, and aligned with the needs of these high volume sellers.

I was brought in as the UX researcher to support the development of this tool, shaping its feature design based on real user feedback and advocating for the sellers’ needs throughout the process.

The Research Process

Defining our target end user

To understand the workflows of high volume sellers, I first worked with our project team to align on our definition of a high volume seller. Using analytics, we defined a high volume seller as someone who listed (not sold) at least 30 items per month. This threshold was well above typical behavior: the typical Mercari user listed about 6 items at a time, and these listing sessions tended to occur seasonally throughout the year.

To better characterize our target users, I designed a survey to gather more information about which platforms or third-party tools these high volume sellers might use to sell items online. The survey also served as a recruitment tool for the next part of the study.

Contextual Inquiry

I then conducted a contextual inquiry study observing the listing process for experienced eBay sellers who regularly list across multiple platforms. These conversations revealed their frustrations with current cross-listing tools and helped me uncover what would make the process smoother. Many sellers expressed skepticism toward automated listing tools, as they had experienced past tools that promised ease but ultimately didn’t deliver. They preferred spending more time upfront ensuring listings were done right, rather than dealing with complications later. This taught me that for this tool to be successful, it had to focus on clarity at every step—helping sellers seamlessly review items, including descriptions, photos, and shipping details—and provide trustworthy, step-by-step guidance throughout the process.

Usability Testing

Building off the contextual inquiry study, we then moved forward to usability testing to examine how high volume sellers interacted with our prototype feature. I ran unmoderated tests with eight experienced sellers—each listing at least 30 items per month. Participants were asked to “think aloud” as they interacted with the tool, which helped me understand their thought processes, frustrations, and key challenges. The usability tests revealed several pain points:

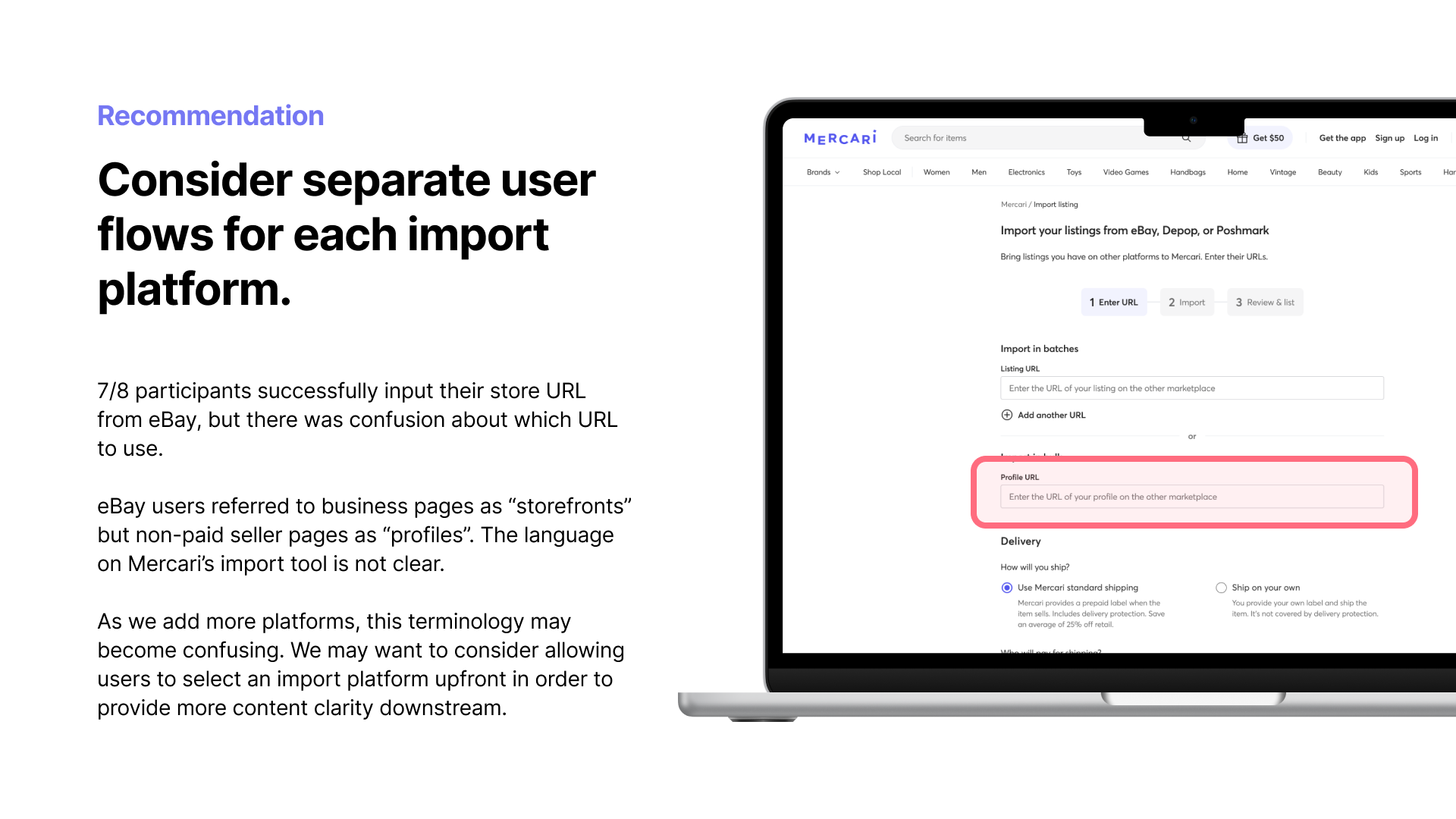

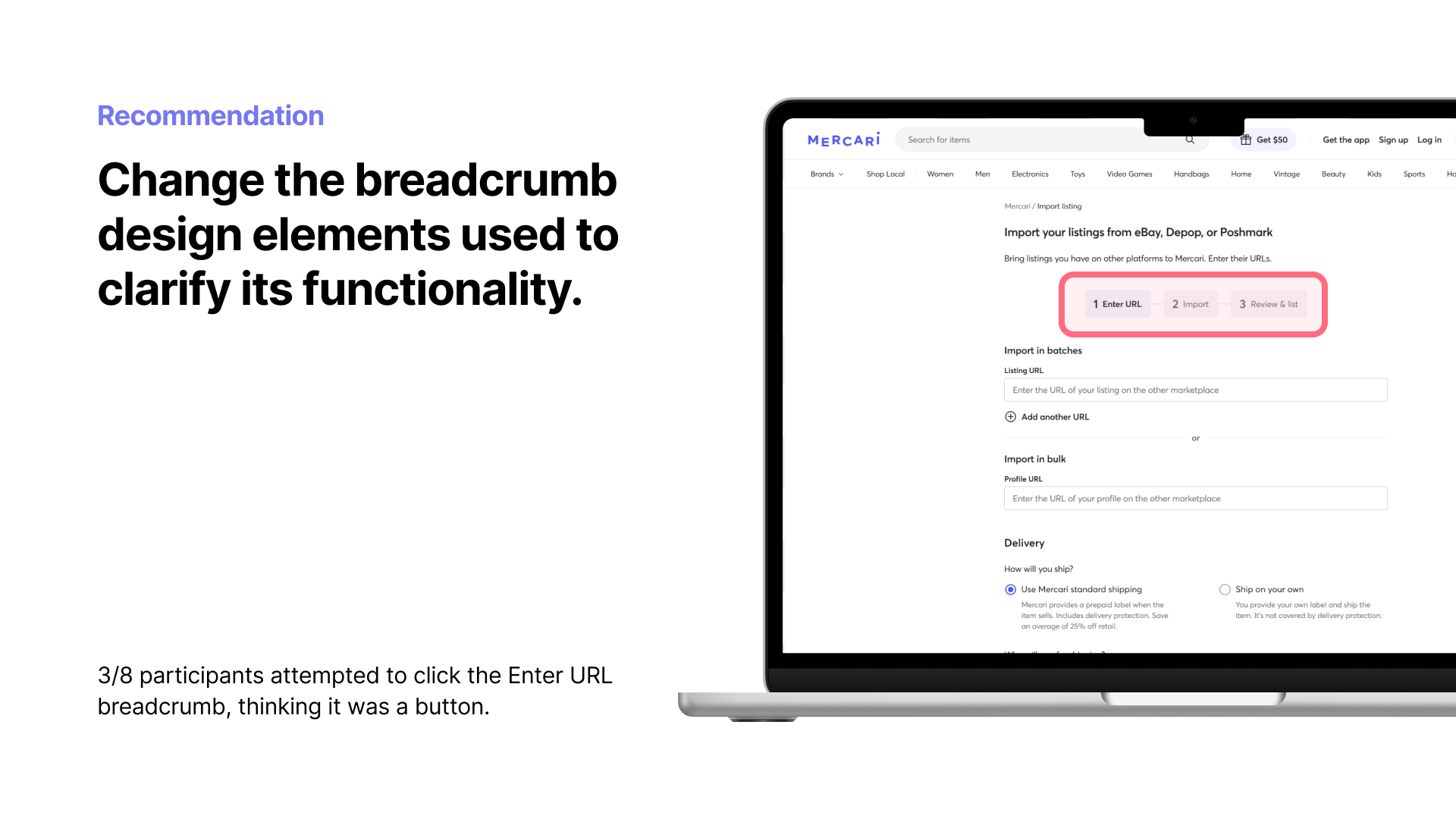

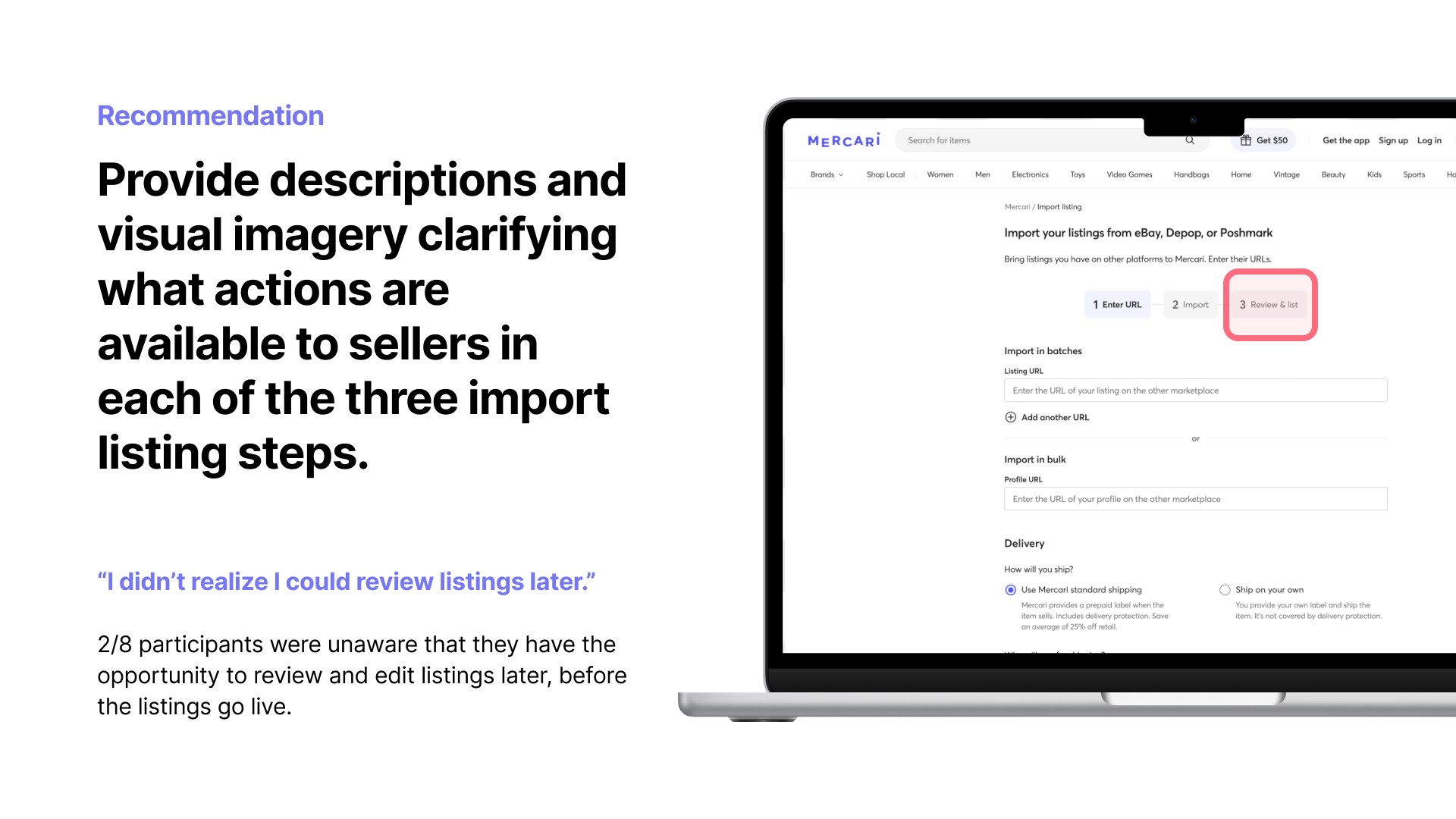

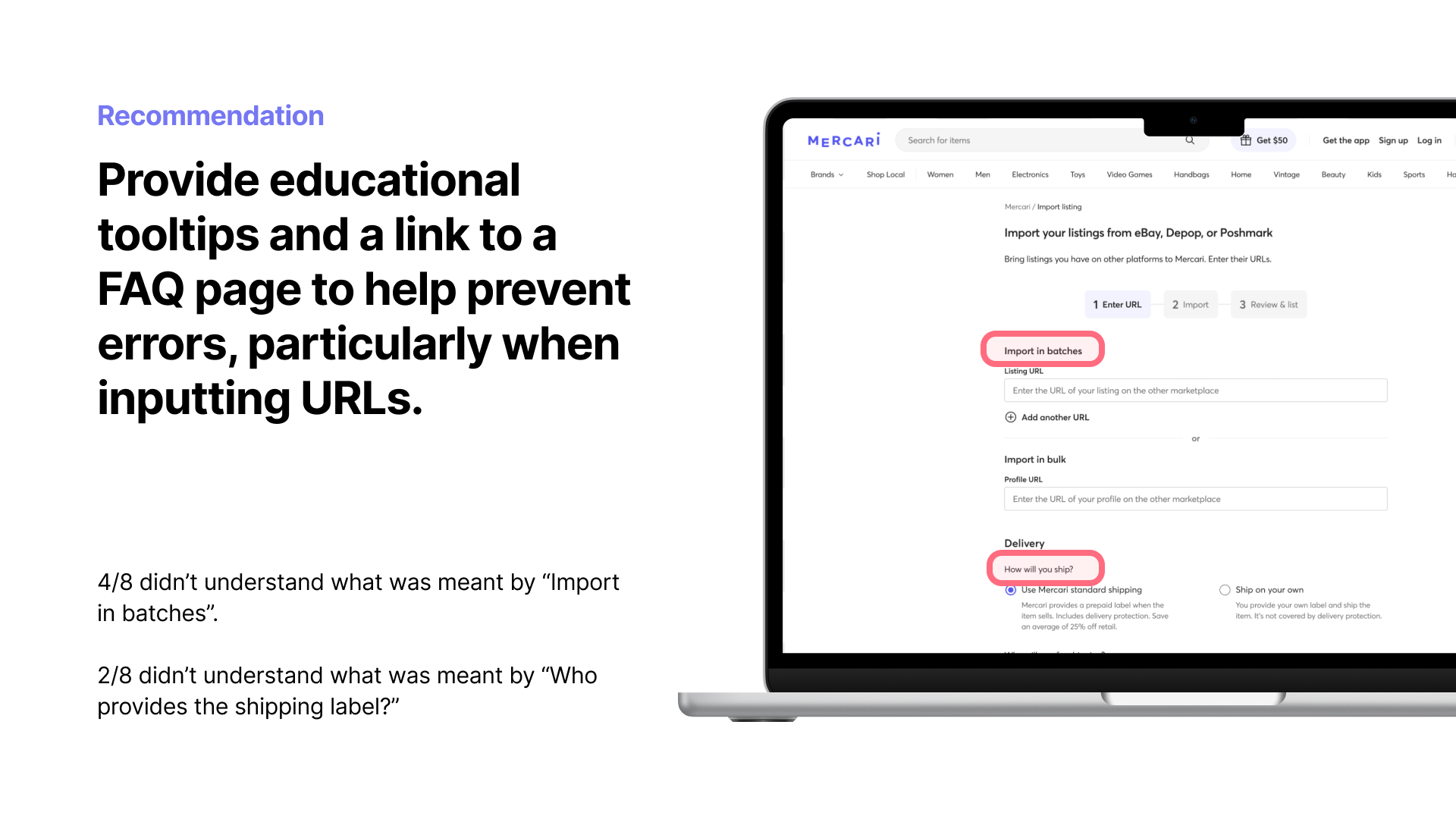

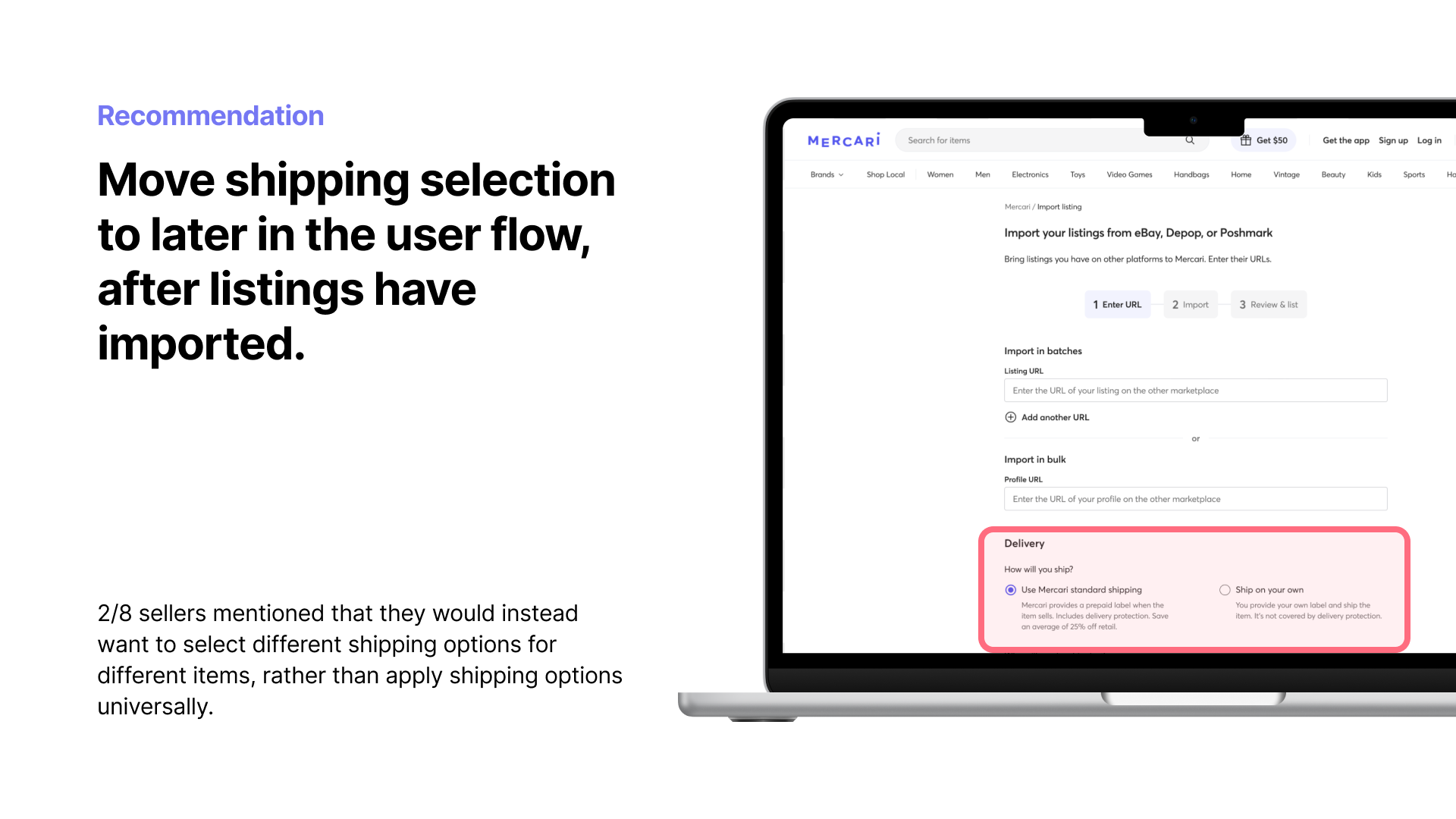

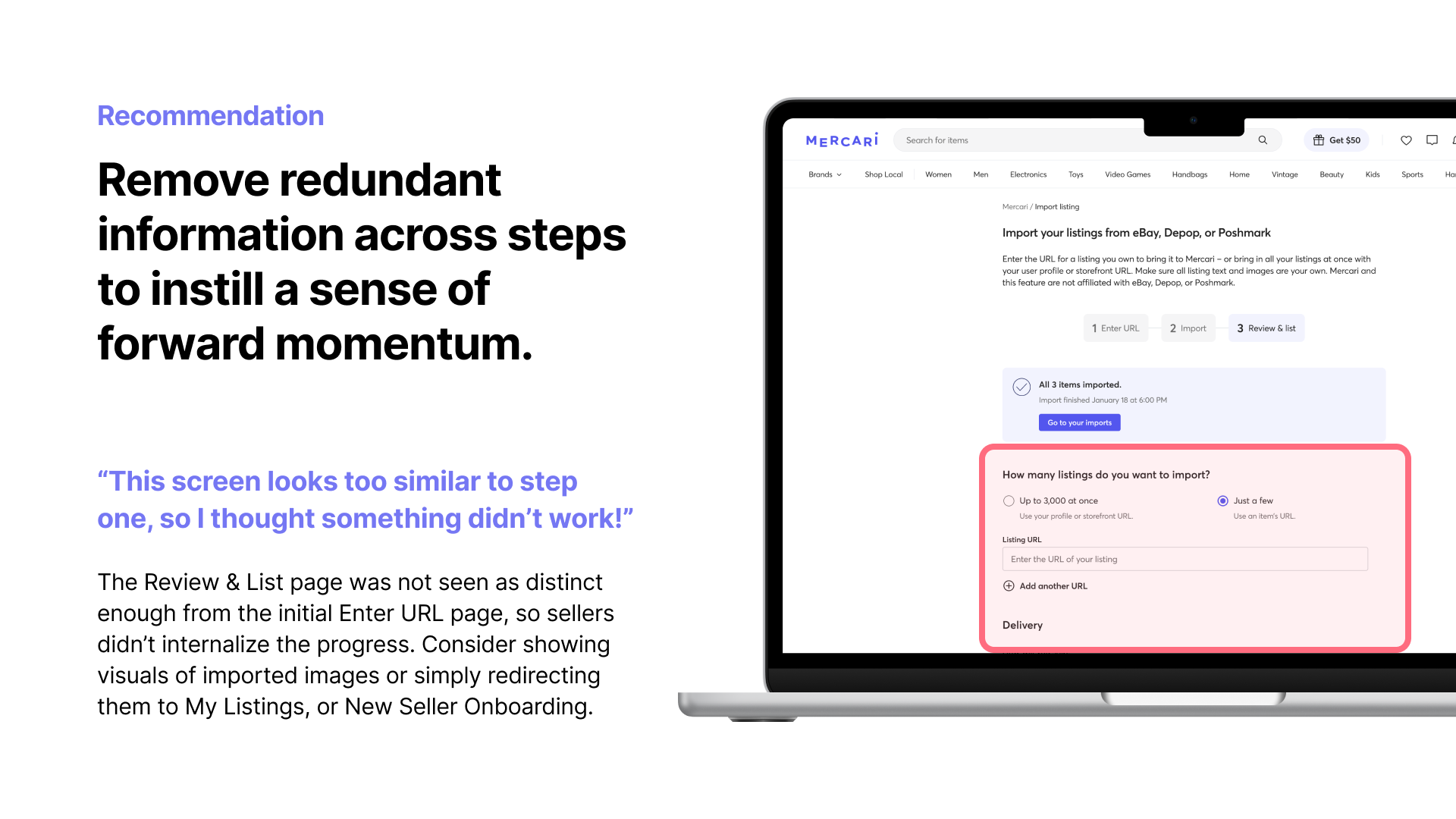

- Task 1: Completing the Import Form. Sellers were confused about which URLs to input, struggled with choosing shipping options, and lacked clarity around the review step. Some mistakenly clicked the "Enter URL" breadcrumb, thinking it was a button.

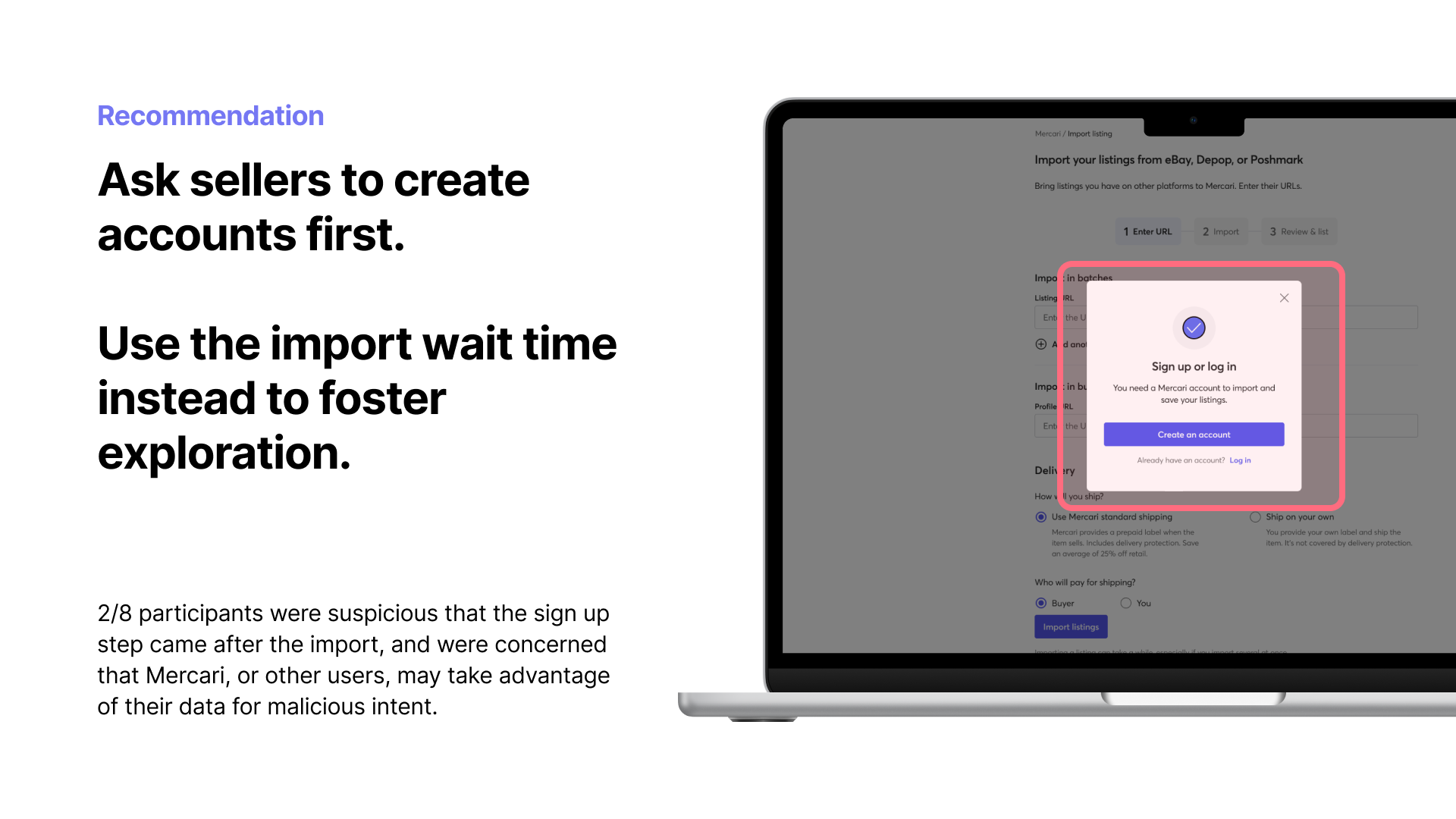

- Task 2: Reviewing Imports. Sellers were hesitant about having to input URLs before account registration, leading to trust issues.

- Task 3: Activating Listings. Users had difficulty accessing shipping details for each item, were unaware of bulk edit functionality, and couldn’t easily edit imported metadata.

- Task 4: Accessing Listings. Sellers didn’t realize they could apply bulk edits to multiple listings at once, leading to inefficient workflows.

Findings

These usability tests provided deep insights into what high volume sellers needed in order to adopt the tool successfully. Many sellers preferred tools that fit into their existing routines—something that felt familiar and intuitive. Sellers valued flexibility, but they also needed clear guidance and trust-building features to feel confident using the tool.

It became clear that many sellers were cautious about automated listing tools because they had been disappointed by similar tools in the past. Building trust—through clear instructions, visual progress indicators, and transparent explanations—was essential to getting them to use the tool regularly.

Recommendations

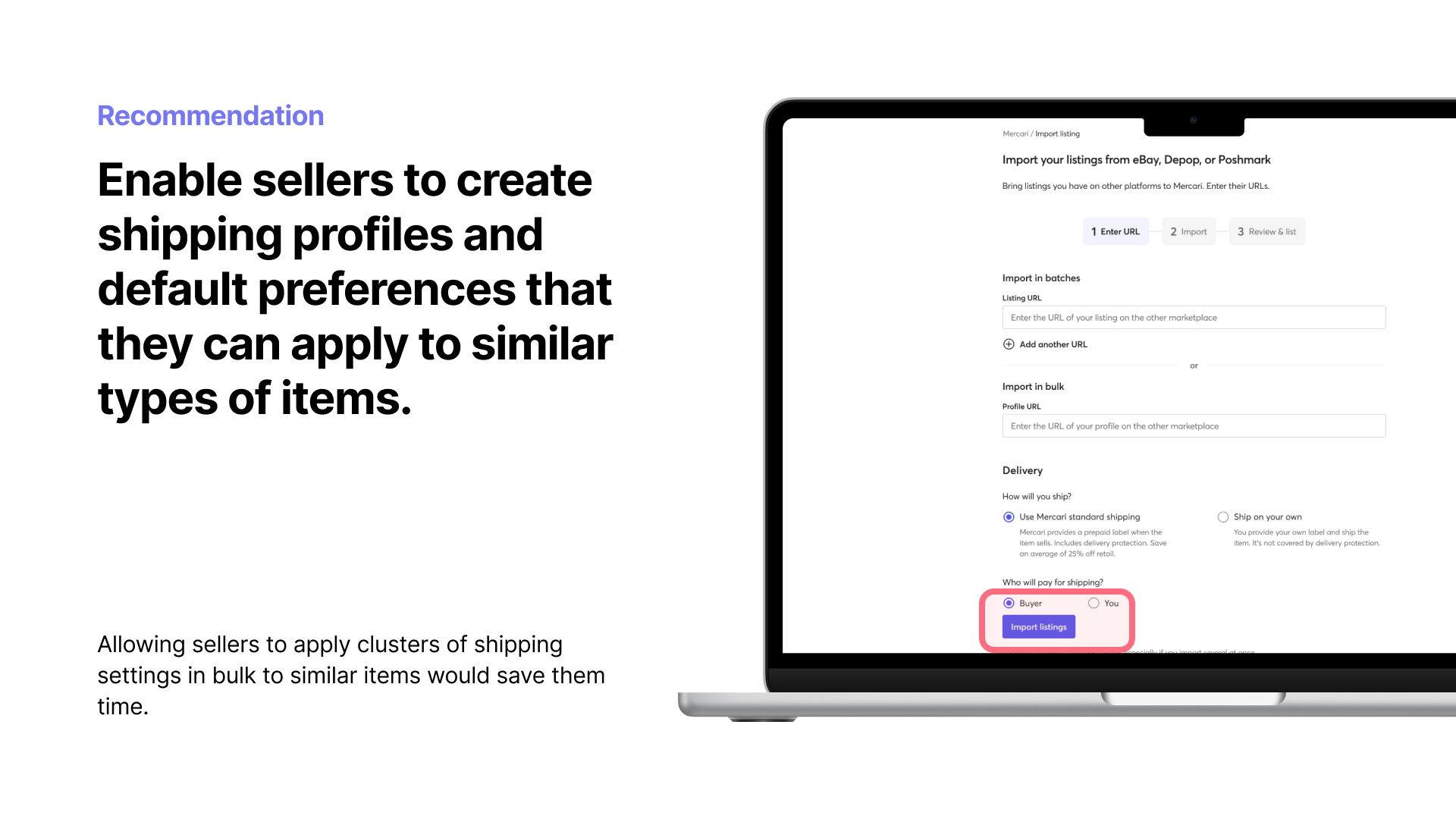

Based on these insights, I recommended several design improvements:

- Separate user flows for each import platform to avoid confusion and ensure that sellers could use their preferred method.

- Enhance visual hierarchy with clearer breadcrumbs and more effective tooltips to guide users through each step.

- Move shipping options to appear later in the process, after listings have been successfully imported.

- Include educational content like FAQs to provide additional guidance for sellers navigating the tool.

Outcome

The release of the Import Listing feature led to:

- 3x increase in new daily listings.

- 395,000 new listings in the first month after launch.

- 1,000 new high volume sellers using the import tool within the first month.

Setbacks

Unfortunately, we weren’t able to fully implement all of the recommended improvements for reviewing listings post-import. When the feature launched, we saw a significant spike in new listings, but many of those listings ended up sitting in sellers’ drafts—not yet live on the marketplace. We also saw mixed feedback from the post-launch live intercept survey I designed, where sellers reported frustration with metadata not importing accurately. This became a key issue I couldn’t fully address as I transitioned to a new project team. It highlighted the opportunity to advocate more strongly for research insights during post-research handoffs—something I plan to focus on improving moving forward.

New Insights

Although the launched feature fell short of my hopes for a seamless post-listing experience, the project produced valuable insights that carried forward into new developments. It changed the way we thought about using AI to enhance listing experiences. We already knew that model accuracy has a tremendous impact on users' perceived value of an AI-powered experience, but this was the first time we began to rethink how to incorporate AI in other ways. We weren't able to execute our full vision for this feature, but we learned that using AI on the backend to improve item discoverability could be a huge asset for our platform, and it went on to shape the way we designed search, browsing, and multi-item listing experiences. The search AI improvements alone increased the number of items ordered from the search page in previously underperforming categories by 13% within a few weeks.

I would have loved to experiment further with this concept, exploring how we could give sellers more transparency about how AI is used to improve item discovery and how they can best work with the technology rather than against it.

Looking Back

This project highlighted the importance of being proactive in turning research insights into actionable UX recommendations. I learned that simply presenting findings isn’t enough—especially when it comes to complex workflows. For this project, I tried running a virtual research poster session, where the team watched clips from usability testing with high volume sellers interacting with the tool and attempted to come to their own conclusions. While this did provide designers with firsthand exposure to user feedback, it wasn’t as fruitful as I had hoped. Designers needed more concrete UX guidance—clear, actionable steps that could immediately shape the next iteration of the tool.

Additionally, when the feature was rolled out, the pressure from leadership to launch quickly, despite our design team's cautions, became a significant challenge. The focus shifted to getting the tool live, with less regard for ensuring sellers could activate their listings smoothly after import. This resulted in a lot of listings being left in draft mode—something our design team had flagged when negotiating development timelines. Unfortunately, our recommendations about prioritizing improvements to the draft review process were overlooked in favor of speed.

From this experience, I learned the importance of advocating for long-term usability and ensuring that design teams have the bandwidth to implement thoughtful changes. It became clear that communicating the value of iterative improvements and pushing back against short-term pressures is critical to ensuring successful outcomes. In the future, I’ll focus on building stronger alignment with leadership by clearly linking research findings to business outcomes—highlighting the long-term impact of well-considered design choices, rather than simply rushing to meet deadlines.